Last updated on January 24, 2025

Microsoft Azure Logic Apps is mainly used to automate tasks across your Azure resources and even third-party apps. It has been a game changer for IT admins who want to automate routine tasks such as sending monthly disk utilization reports, sending CPU reports, or updating App Service certificates when they expire.

Azure Databricks is a well-known data processing tool for building data analytics solutions that use AI. However, I have seen customers struggle to connect to an Azure Databricks workspace using the HTTP connector because, at the time of this writing, there is no managed connector for Databricks in Logic Apps.

In this blog, I’ll share a small problem that I came across while connecting Databricks to a Logic App workflow using the HTTP connector, and how I fixed it. Let’s go!

Objective

To run a job inside a Databricks workspace using the Logic App’s system-assigned managed identity.

Resolution

If you already have a Logic App (Standard), skip ahead. Otherwise, first create a test Logic App with the Standard SKU using the following documentation by Microsoft here.

Once it is created, follow the steps below to achieve the objective:

- Go to Overview of your Logic App from the left-navigation menu.

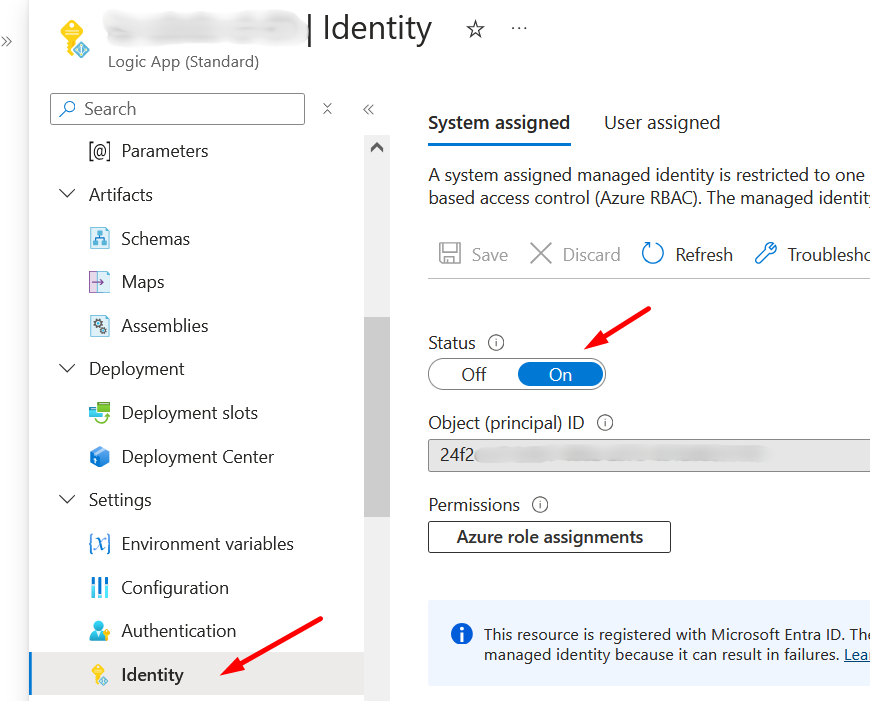

- Go to Identity section as seen in screenshot below and turn ON the System-Assigned Managed Identity.

Azure Logic App

3. Click Azure role assignments for this system-assigned managed identity and grant it the Contributor role on the Databricks resource.

4. Next, in the Logic App workflow designer, open the HTTP connector, set the Authentication type to Managed Identity, and then select System-assigned managed identity. The Audience field is not mandatory, but I recommend filling it with the Application ID of the “AzureDatabricks” enterprise application in your Entra ID tenant. This is a Microsoft-managed SPN for Databricks. Your configuration should look like the one seen below.

5. Next, follow this document and perform Step 3 to add your system-assigned managed identity to the Databricks workspace as a service principal.

6. One last step is to grant the Can Manage or Is Owner permission on the Databricks job to this service principal, which is in effect your system-assigned managed identity. Here is the Databricks document that explains how to do it: Manage identities, permissions, and privileges for Databricks Jobs

7. Run your Logic App workflow, passing any test parameters in the Body of the HTTP action, and check the results in the Databricks workspace, where the job will run as the service principal.
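For readers who prefer the code view over the designer, here is a rough Python sketch of what steps 4, 6 and 7 boil down to. It only builds JSON and sends nothing. The function names, the example workspace URL, the job ID and the parameter names are all hypothetical, and I am assuming the job is triggered through the Databricks Jobs `run-now` endpoint and that the permission is granted through the Databricks Permissions API. The application ID shown is the well-known one for the “AzureDatabricks” enterprise application, but do verify it in your own tenant.

```python
import json

# Well-known application ID of the Microsoft-managed "AzureDatabricks"
# enterprise application. Assumption: confirm it in your own Entra ID tenant.
AZURE_DATABRICKS_APP_ID = "2ff814a6-3304-4ab8-85cb-cd0e6f879c1d"


def build_run_job_action(workspace_url, job_id, job_parameters=None):
    """Build an HTTP action (code view) that calls the Jobs run-now endpoint.

    Hypothetical helper: workspace_url and job_id come from your own setup.
    """
    body = {"job_id": job_id}
    if job_parameters:
        # Test parameters passed in the Body of the HTTP action (step 7).
        body["job_parameters"] = job_parameters
    return {
        "type": "Http",
        "inputs": {
            "method": "POST",
            "uri": f"{workspace_url.rstrip('/')}/api/2.1/jobs/run-now",
            "headers": {"Content-Type": "application/json"},
            "body": body,
            # System-assigned managed identity with the Audience set (step 4).
            "authentication": {
                "type": "ManagedServiceIdentity",
                "audience": AZURE_DATABRICKS_APP_ID,
            },
        },
        "runAfter": {},
    }


def build_job_permission_payload(sp_application_id, level="CAN_MANAGE"):
    """Build the body for PATCH /api/2.0/permissions/jobs/<job_id> (step 6)."""
    if level not in ("CAN_MANAGE", "IS_OWNER"):
        raise ValueError("level must be CAN_MANAGE or IS_OWNER")
    return {
        "access_control_list": [
            {
                "service_principal_name": sp_application_id,
                "permission_level": level,
            }
        ]
    }


if __name__ == "__main__":
    # Placeholder values for illustration only.
    action = build_run_job_action(
        "https://adb-1234567890123456.7.azuredatabricks.net/",
        job_id=123,
        job_parameters={"env": "test"},
    )
    print(json.dumps(action, indent=2))
    print(json.dumps(build_job_permission_payload("<application-id>"), indent=2))
```

The printed action can be compared with what the designer generates in the workflow’s code view. The second payload is what a workspace admin would send if they chose to grant the job permission through the API instead of the Databricks UI.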

Hopefully this blog helps if you ever come across this problem, and I hope the steps above resolve your issue, because they did for me ;).

Happy learning!
